Artificial intelligence in medicine. A Pole is working on detecting breast cancer quickly and effectively

“If a machine can identify faces or license plates, why not use it for medical imaging?” says Dr. Marcin Sieniek, recalling how his work on using artificial intelligence to analyze mammograms began. Today he can talk about actually putting these solutions into practice. Will AI replace doctors in the future?

Katarzyna Świerczyńska, “Wprost”: AI is entering almost every area of life and is already used in medicine. Will the medicine of the future be able to do without artificial intelligence?

Marcin Sieniek*: Medicine is a huge sector of the economy, fragmented into many diseases and many specializations. I wouldn't go so far as to say that AI will work in every one of these areas, but where processes are repeatable and we have access to enough verifiable data, I think artificial intelligence will become more and more helpful.

It should be noted, however, that medicine is a rather conservative field, where every innovation has to go through extensive validation and certification. I'm sure AI will play a big role in medicine in the future, but for now it will definitely take time.

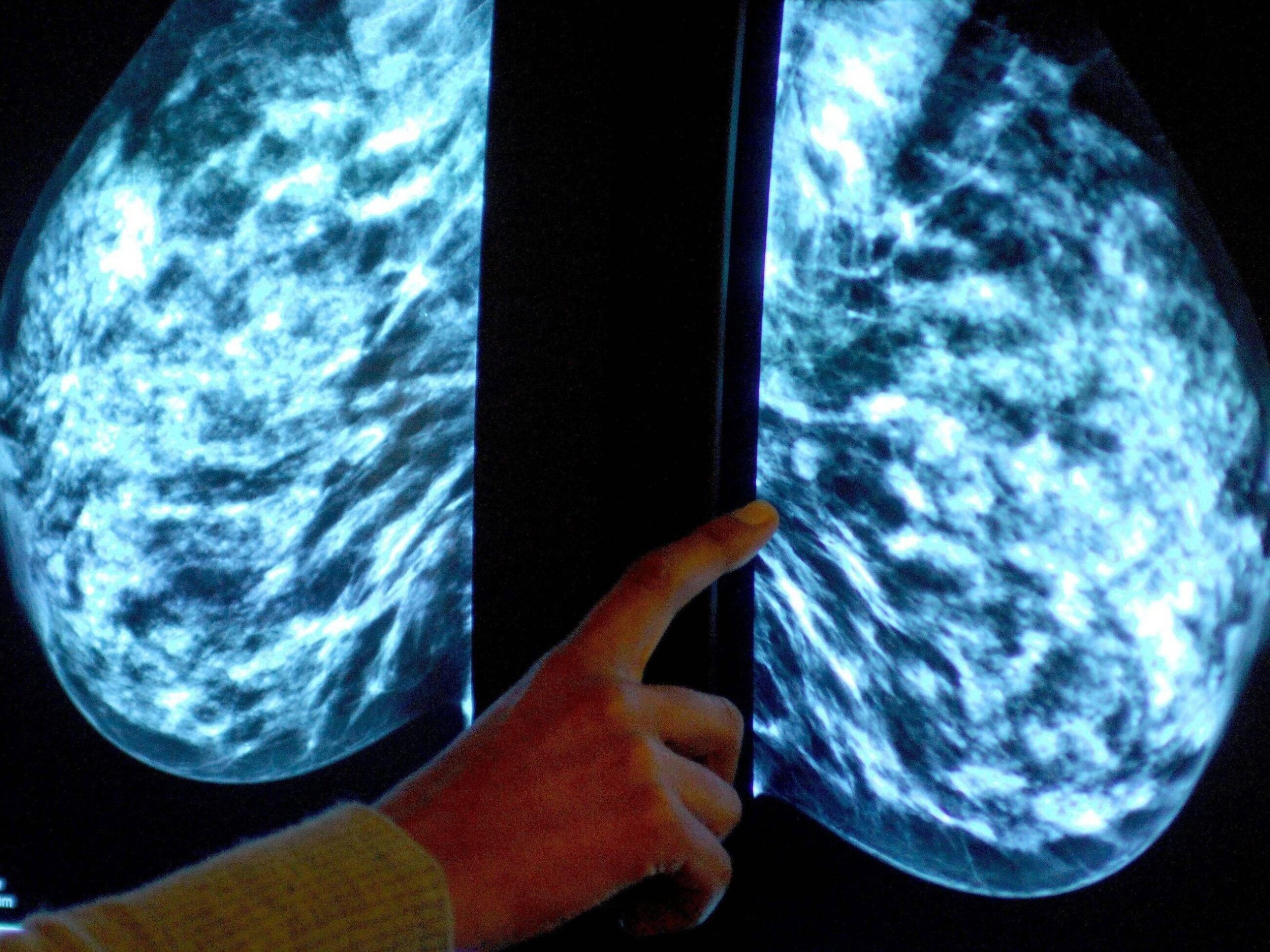

You are working on one of these projects: using AI in mammography for the early detection of breast cancer.

Diagnostics, and screening programs in particular, are indeed an area where AI can help a lot, because they involve analyzing large amounts of structured data.

In a breast cancer screening program, we can train the AI to answer one question: does the patient have an increased risk of breast cancer, or is cancer already developing?

In this sense, mammography is a relatively easy domain for AI. Not all medical imaging is like that: from chest images alone, a doctor can diagnose some 200 different diseases, which until recently was beyond the capabilities of machine learning.

And now AI will be able to do all this for the doctor?

Not entirely. AI can improve the accuracy of diagnoses, speed up the process and reduce costs. But doctors still have a much bigger role to play, even where diagnostic devices are autonomous.

I also see one more important aspect: thanks to AI, we will be able to make screening programs more widely available.

This is especially important in countries with a shortage of radiologists, where patients wait too long for a diagnosis. In fact, this is already happening. One of Google's most mature programs, for example, is a system for autonomously diagnosing diabetic retinopathy in people with diabetes. It is being successfully deployed in India, and we are now working with the Thai government on the same issue. These are among the first examples of AI actually being deployed at scale.

Let’s go back to your project. How does your AI work help identify women at risk of breast cancer quickly and effectively?

When we started five years ago, we were a tiny team: two engineers (my colleague and me) and one radiologist. From the very beginning, our hypothesis was that deep learning could help detect lesions in medical images. After all, if a machine can identify faces or license plates, why not use it for medical imaging? Of course, it isn't that simple: some lesions are only a few pixels in size, mammograms have quite a high resolution, and sometimes several different views have to be read together. So we weren’t sure it would work…

But it worked, and the result was a publication in “Nature”, which you co-authored.

Yes. Over time the project grew, and so did the team. Together with specialists from London, we built a model that matched doctors in the accuracy of its interpretations of historical data. The publication sparked wide discussion, although I must stress again that we are still working with historical data, not a situation where a patient has just walked in and we have to decide what to do next. Now we are testing whether all this can be used in the real world, in clinical practice. We are currently working on certifying our model in Europe, which is a long and difficult process. Meanwhile, COVID-19 arrived, and we decided to put our technology to a different use. We all know how the pandemic delayed or even halted prevention and screening programs. Waiting times for a diagnosis stretched to months.

We know this from Poland too. Cancer diagnostics came to a standstill.

Exactly. Even when screening centers reopened after the lockdowns, a patient would come in for a scan and then wait months for it to be read. So, together with a hospital in Chicago, we implemented a system that made it possible to assess a mammogram right away. If something worrying showed up, the patient could stay for further tests the same day, instead of facing a long wait for the result or an unnecessary risk of COVID exposure during follow-up visits. The results are very good, and we will soon publish them in the medical literature. I would also add that several British hospitals have now received a grant to implement our system. In the UK, each image is read by two radiologists. If they disagree, a third radiologist joins and the case is discussed.

When staff are short, AI could take the place of one of the two specialists. If the doctor’s assessment and the system’s disagree, another doctor reviews the case.

This will greatly speed up diagnosis and help address the acute shortage of radiologists the UK is facing today.
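The double-reading workflow described above boils down to a simple decision rule: two independent reads, and escalation to another radiologist only when they disagree. The sketch below is purely illustrative and assumes a binary “recall / no recall” outcome; the function names and the arbitration callback are hypothetical and do not describe how UK hospitals or Google’s system actually implement the process.

```python
from typing import Callable, Literal, Tuple

# Hypothetical binary outcome of one screening read.
Decision = Literal["recall", "no_recall"]


def double_read(
    first_reader: Decision,
    second_reader: Decision,
    arbitrate: Callable[[Decision, Decision], Decision],
) -> Tuple[Decision, bool]:
    """Combine two independent reads of one mammogram.

    Either reader may be a radiologist or, in the scenario discussed
    above, an AI system standing in for one of them. Returns the final
    decision and whether an extra human review was needed.
    """
    if first_reader == second_reader:
        # The readers agree: their shared opinion is final.
        return first_reader, False
    # The readers disagree: another radiologist reviews the case.
    return arbitrate(first_reader, second_reader), True


def cautious_arbiter(a: Decision, b: Decision) -> Decision:
    # Hypothetical arbitrating radiologist who always errs toward recall.
    return "recall"


# Usage: the radiologist says "no_recall", the AI reader says "recall".
print(double_read("no_recall", "recall", cautious_arbiter))  # ('recall', True)
```

In this framing, replacing one human reader with AI changes only who supplies `second_reader`; every disagreement still ends with a human decision, which is the safeguard described in the interview.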

Now let me ask a more personal question. You are a computer scientist, an engineer. How did you become interested in medicine and mammography in particular?

Some things just happen. Of course, it wasn’t planned. When I went to study computer science, I never imagined going in this direction. When I started my career at Google in Poland many years ago, I was on a team that found websites where our ads could be placed. The team achieved a lot; meanwhile, I moved to the US and started looking for a more stimulating project. Several new Google Health projects were just being launched.

That’s when I realized I wanted to do something with real impact, something that in a broader sense would be truly valuable, would benefit other people, would be good for the world. It didn’t have to be mammography, but it was great that this project was available.

You mentioned that your breast cancer diagnosis solution is going through certification in Europe. So when can we realistically expect it to be used in hospitals, so that when I go for a mammogram I’ll know the image is being analyzed not only by a doctor but also by the system your team developed?

I hope the system will be operational in hospitals by the end of this year or early next year. We have partnered with iCAD, a global leader in medical technology and cancer detection, whose devices are installed in thousands of facilities in the US and Europe, including Poland. We have licensed our AI mammography research model to them, and they will validate it and incorporate it into their products. As a result, the technology we built will be used in clinical practice to improve breast cancer detection and risk assessment for more than two million people a year worldwide.

It’s worth mentioning that when we talk about “medical devices” here, we don’t mean physical installations that require, say, buying expensive graphics cards. We’re talking about a system that runs in the cloud, which also helps keep data secure.

So “Doctor Google” will get even smarter? You probably know that doctors use that phrase mockingly about patients who try to diagnose themselves online.

I’m not a supporter of self-diagnosis, but AI systems can certainly help us on many levels. One of our products, for example, can already recognize skin conditions from a photo. We don’t call it a diagnosis, but it gives users some idea of whether they should be concerned at all and see a doctor. In the US, you can wait months to see a dermatologist, so this can really help.

I think many people are asking themselves today: will we be treated by artificial intelligence in the future? You are also working on projects that teach AI systems medical language…

There are several such projects. Among other things, I work on training an AI system not only to organize specific medical knowledge but also to relate it to images.

This could let a doctor ask a very specific question, for example which drugs are recommended for a particular type of tumor. I’m working on such a foundational system, which could then underpin many different medical applications for both doctors and consumers.

In some processes, AI will advance faster than in others. For now, these are mainly systems that support doctors, which means the doctor makes the final decision. But we already have systems that work autonomously, such as the diabetic retinopathy screening system I mentioned, which has been fully approved and is operating successfully.

I think it’s a great opportunity, but also a huge responsibility.

That is why we always prioritize transparency in our work and collaborate closely with the scientific and medical community. I believe that in the future, doctors will be able to focus mainly on decision-making and on the most difficult cases, while AI supports them with more routine tasks and data analysis.

*Dr. Marcin Sieniek, Staff Software Engineer at Google, heads a team of engineers working on the use of AI in cancer diagnosis.